Daily Stream

CoreWeave's Strategic Expansion into Europe

CoreWeave, the US-based GPU cloud computing company founded in 2017, has secured a reported $19 billion valuation following a $1.1 billion funding round. What sets CoreWeave apart from its competitors, and why is its decision to expand into Europe, specifically the U.K., such a pivotal move?

OpenAI Plans to Announce Its New Search Product

As an avid follower of advances in artificial intelligence (AI), I’ve been eagerly awaiting OpenAI’s expected announcement of an AI-driven search product. Such a product would pose a significant challenge to Google, the reigning monarch of the search engine market.

Artificial Intelligence in Military Aviation: A View from the Top

As the world grapples with rapid advances in AI and their potential applications, one field drawing significant attention is military aviation. Integrating AI into military aircraft could be a game-changer, bringing both opportunities and challenges for national security, international relations, and ethics. To explore these issues, we turn to Secretary of the Air Force Frank Kendall and his perspective on this critical technological development.

Is RAG a Promising Approach or Just Hype?

Generative AI models, with their ability to create human-like text, images, and even music, have transformed the way we engage with technology. However, these models come with an inherent risk: hallucinations, in which they generate false, misleading, or entirely fabricated information. For businesses, this can lead to erroneous decisions, reputational damage, and legal issues. Retrieval-augmented generation (RAG), which grounds a model’s answers in external documents, has been proposed as a way to reduce this risk.
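At its core, RAG retrieves passages relevant to a query and places them in the prompt, so the model can answer from supplied text rather than from memory alone. The sketch below covers only the retrieval and prompt-building half. It assumes a toy bag-of-words cosine similarity in place of a real embedding model. The corpus and the `retrieve` and `build_prompt` helpers are hypothetical, and no language model is called.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    # Lowercase and split on anything that is not a letter or digit.
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words count vectors.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    # Rank documents by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(corpus, key=lambda doc: cosine(q, Counter(tokenize(doc))), reverse=True)
    return ranked[:k]

def build_prompt(query: str, passages: list[str]) -> str:
    # Put the retrieved passages before the question and ask the model to stay within them.
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )

# Hypothetical knowledge base for illustration.
corpus = [
    "The refund window for annual plans is 30 days.",
    "Support is available by email on weekdays.",
    "Monthly plans can be cancelled at any time.",
]
query = "How long is the refund window for annual plans?"
print(build_prompt(query, retrieve(query, corpus, k=1)))
```

The instruction to answer only from the context, together with an explicit way out ("say so"), is what is meant to discourage the model from inventing an answer when retrieval finds nothing useful.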

Superconducting, Trapped Ion, and Photonic Qubits

Quantum computing has witnessed a surge of innovation and progress in recent years. At the core of this budding field lies the qubit, a two-level quantum system that encodes information using the properties of quantum mechanics. In this article, we delve into the world of qubits and compare three leading contenders in the race for quantum advantage: superconducting qubits, trapped ion qubits, and photonic qubits.
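To make "two-level quantum system" concrete: a qubit’s state is a pair of complex amplitudes (alpha, beta) with |alpha|^2 + |beta|^2 = 1. Measuring it gives 0 with probability |alpha|^2 and 1 with probability |beta|^2. The sketch below simulates a single qubit in plain Python and applies a Hadamard gate to |0> to create an equal superposition. It is a mathematical model only and says nothing about how superconducting, trapped-ion, or photonic hardware physically realizes a qubit.

```python
import math

# A single-qubit state as the amplitudes (alpha, beta) of |0> and |1>.
State = tuple[complex, complex]

def apply_gate(gate, state: State) -> State:
    # Multiply a 2x2 gate matrix by the state vector.
    (a, b), (c, d) = gate
    alpha, beta = state
    return (a * alpha + b * beta, c * alpha + d * beta)

def probabilities(state: State) -> tuple[float, float]:
    # Born rule: each outcome's probability is its amplitude's squared magnitude.
    alpha, beta = state
    return (abs(alpha) ** 2, abs(beta) ** 2)

s = 1 / math.sqrt(2)
HADAMARD = ((s, s), (s, -s))
ZERO: State = (1 + 0j, 0j)

plus = apply_gate(HADAMARD, ZERO)       # equal superposition of |0> and |1>
p0, p1 = probabilities(plus)
print(f"P(0) = {p0:.3f}, P(1) = {p1:.3f}")

back = apply_gate(HADAMARD, plus)       # H is its own inverse, so this returns |0>
```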

A New Wave of Optimism Returns to Quantum Computing

Quantum computing has long fascinated researchers with its promise to surpass the capabilities of classical computers. However, progress has been held back by a significant challenge: the instability and noise of qubits, the fundamental building blocks of quantum information.
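A rough way to see why noise matters: if each gate succeeds independently with probability f, a circuit of n gates runs without any error with probability about f^n, which shrinks quickly as circuits grow. The sketch below assumes this simplified independent-error model and uses hypothetical per-gate fidelities. Real devices have correlated errors, and error correction changes the picture entirely.

```python
def circuit_success(fidelity: float, gates: int) -> float:
    # Probability that every gate runs without error, assuming
    # independent errors with the same per-gate fidelity.
    return fidelity ** gates

# Hypothetical per-gate fidelities, for illustration only.
for f in (0.99, 0.999, 0.9999):
    row = ", ".join(f"{n} gates: {circuit_success(f, n):.3f}" for n in (100, 1000))
    print(f"fidelity {f}: {row}")
```

Under this model, improving fidelity from 0.99 to 0.999 lets a circuit run about ten times as many gates at a similar overall success rate (0.99^100 and 0.999^1000 are both about 0.37), which is why reductions in qubit noise matter so much.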

Why the AI Chip Market Bucks Semiconductor Sector Trends

As the semiconductor industry faces cyclical downturns and uncertainty, one key question looms: why does the rapid expansion of the AI chip market defy broader sector trends? AI chips make up only a small fraction of the overall chip market by sales volume, yet they hold significant potential for future growth.

Challenges of Implementing AI in Financial Cybersecurity

Artificial intelligence (AI) is transforming the financial industry, from fraud detection to customer service, and cybersecurity is no exception. However, integrating AI into financial cybersecurity is not without challenges. In this article, we examine two significant hurdles that financial institutions face: the lack of skilled personnel and the need for robust security measures for AI systems themselves.

A Closer Look at Apple's Q1 2024 Revenue

Apple Inc. released its financial results for the first calendar quarter of 2024 (its fiscal second quarter). The company’s revenue stood at $90.75 billion, a 4% decline year-over-year. This figure came in slightly above the consensus estimate of $90.3 billion, beating expectations despite economic challenges and heightened competition. A decline, even one that beat forecasts, is not ideal, so let us look more closely at what this revenue figure means in the context of Apple’s business.
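The headline figures can be checked with simple arithmetic. Comparing revenue with the consensus estimate shows whether the quarter was a beat or a miss. Dividing by (1 - 0.04) gives the prior-year revenue implied by a 4% decline. This sketch uses only the numbers quoted above. The implied prior-year figure is approximate because the 4% is rounded.

```python
def surprise_pct(actual: float, consensus: float) -> float:
    # Percentage by which results beat (+) or missed (-) the consensus estimate.
    return (actual - consensus) / consensus * 100

def implied_prior(current: float, yoy_change_pct: float) -> float:
    # Prior-period value implied by a year-over-year percentage change.
    return current / (1 + yoy_change_pct / 100)

revenue = 90.75   # USD billions, as reported
consensus = 90.3  # USD billions, consensus estimate quoted above
print(f"Versus consensus: {surprise_pct(revenue, consensus):+.2f}%")
print(f"Implied prior-year revenue: ~${implied_prior(revenue, -4):.1f}B")
```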

SoundCloud's Buzzing Playlists for Music Discovery

Streaming platforms have become the go-to destination for music enthusiasts seeking new ways to discover music. SoundCloud, one of the leading music sharing and discovery platforms, is capitalising on this trend by introducing Buzzing Playlists, a curated selection of up-and-coming tracks from emerging artists. But what criteria does SoundCloud use to highlight these tracks? As a data-driven music enthusiast, I delved deeper into this question, exploring SoundCloud’s approach to music curation.

Powering Data Centers with Renewable Energy

As the digital world continues to expand and the demand for data grows rapidly, the energy required to power data centers has become a significant concern for both tech giants and environmentalists. Data centers are estimated to consume around 2% of the world’s electricity, and their consumption is projected to roughly double by 2026. With renewable energy becoming an increasingly viable way to reduce carbon footprints, tech giants are leading the charge in powering their data centers sustainably.
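Doubling within a few years implies steep annual growth, and the rate depends heavily on the baseline year. Suppose, as an assumption not stated in the text, that the baseline is 2022, so the doubling happens over four years. The implied compound annual growth rate is then 2^(1/4) - 1, or roughly 19% per year. The helper below computes this rate for any horizon. The baseline years are hypothetical inputs.

```python
def implied_cagr(growth_multiple: float, years: int) -> float:
    # Compound annual growth rate that turns 1 unit into `growth_multiple` units over `years` years.
    return growth_multiple ** (1 / years) - 1

# Assumed horizons: doubling from a 2023 baseline (3 years) or a 2022 baseline (4 years) to 2026.
for years in (3, 4):
    print(f"Doubling over {years} years: {implied_cagr(2.0, years):.1%} per year")
```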

Peloton's Cost-Cutting Measures

Peloton, the exercise equipment maker and digital fitness platform, has announced a series of cost-cutting measures aimed at improving its financial performance. These include laying off approximately 400 employees, about 15% of its workforce, and they coincide with the departure of CEO Barry McCarthy.

Lamini Focuses On Enterprise Generative AI

According to a recent MIT Technology Review Insights poll, over 75% of organizations have experimented with generative AI, yet only 9% have fully adopted it. Top barriers included a lack of IT infrastructure, insufficient skills, poor governance structures, high costs, and security concerns.

The Enduring Value of Human-Curated Data in AI

As artificial intelligence (AI) technology continues to evolve at an astonishing pace, the need for high-quality training data to fuel its growth remains a crucial concern. One technique that depends heavily on such data is Reinforcement Learning from Human Feedback (RLHF). In this piece, I explore why human-curated data, specifically the data used in RLHF, will remain indispensable in the years to come.
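In RLHF, the human-curated data typically takes the form of preference comparisons. A labeler picks the better of two model responses, and a reward model is trained so that the preferred response scores higher. A common choice of objective is the pairwise (Bradley-Terry style) loss -log(sigmoid(r_chosen - r_rejected)). The sketch below computes that loss for hypothetical reward scores. It illustrates the objective only and is not any particular lab’s training code.

```python
import math

def pairwise_preference_loss(r_chosen: float, r_rejected: float) -> float:
    # -log(sigmoid(margin)) == log(1 + exp(-margin)), computed in a way
    # that avoids overflow for large positive or negative margins.
    margin = r_chosen - r_rejected
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

# Hypothetical reward-model scores for (preferred, rejected) response pairs.
pairs = [(2.0, 0.5), (0.3, 0.3), (-1.0, 1.5)]
for chosen, rejected in pairs:
    loss = pairwise_preference_loss(chosen, rejected)
    print(f"chosen={chosen:+.1f} rejected={rejected:+.1f} loss={loss:.3f}")

mean_loss = sum(pairwise_preference_loss(c, r) for c, r in pairs) / len(pairs)
```

The loss is small when the reward model already ranks the human-preferred response higher and grows when it ranks the pair the wrong way round. This is how human judgments are distilled into a reward signal.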

Synthetic Data: Fueling the Future of Large Language Models

The recent surge in Natural Language Processing (NLP) and Machine Learning (ML) research has brought a heightened focus on synthetic data and its role in driving large language model development. Synthetic data, or data created by machines rather than humans, has a long history in this field, but its potential in the post-ChatGPT era is more significant than ever. In this essay, we will explore the primary arguments for and against the use of synthetic data in AI model development, with a specific focus on large language models.
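As a toy illustration of "data created by machines rather than humans", the sketch below generates instruction-response pairs from a template. Each answer is computed programmatically, so it is correct by construction. Real LLM pipelines usually generate text with a model and then filter it. The template, field names, and `make_examples` helper here are hypothetical.

```python
import json
import random

def make_examples(n: int, seed: int = 0) -> list[dict]:
    # Generate n synthetic arithmetic Q&A pairs; the seed makes output reproducible.
    rng = random.Random(seed)
    examples = []
    for _ in range(n):
        a, b = rng.randint(10, 99), rng.randint(10, 99)
        examples.append({
            "instruction": f"What is {a} + {b}?",
            "response": str(a + b),  # computed by the program, not written by a person
        })
    return examples

for ex in make_examples(3, seed=42):
    print(json.dumps(ex))
```

Correct-by-construction labels are one argument for synthetic data. The narrow, repetitive distribution that such templates produce is one argument against it.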

Who Made GPT2-Chatbot?

The mysterious ‘gpt2-chatbot’, which recently appeared without attribution on the LMSYS Chatbot Arena, has ignited excitement and curiosity in the research community with its surprising capabilities. The burning question on everyone’s mind: does ‘gpt2-chatbot’ hold its ground against the current leader in the field, OpenAI’s GPT-4?

Weaponized AI in Cybersecurity: Top Threats for 2024

As the use of artificial intelligence (AI) in cyberattacks continues to grow, understanding the top threats facing organizations in 2024 is essential for maintaining effective cybersecurity. In this article, I outline five of the most significant threats that cybersecurity teams need to prepare for in the age of weaponized AI.

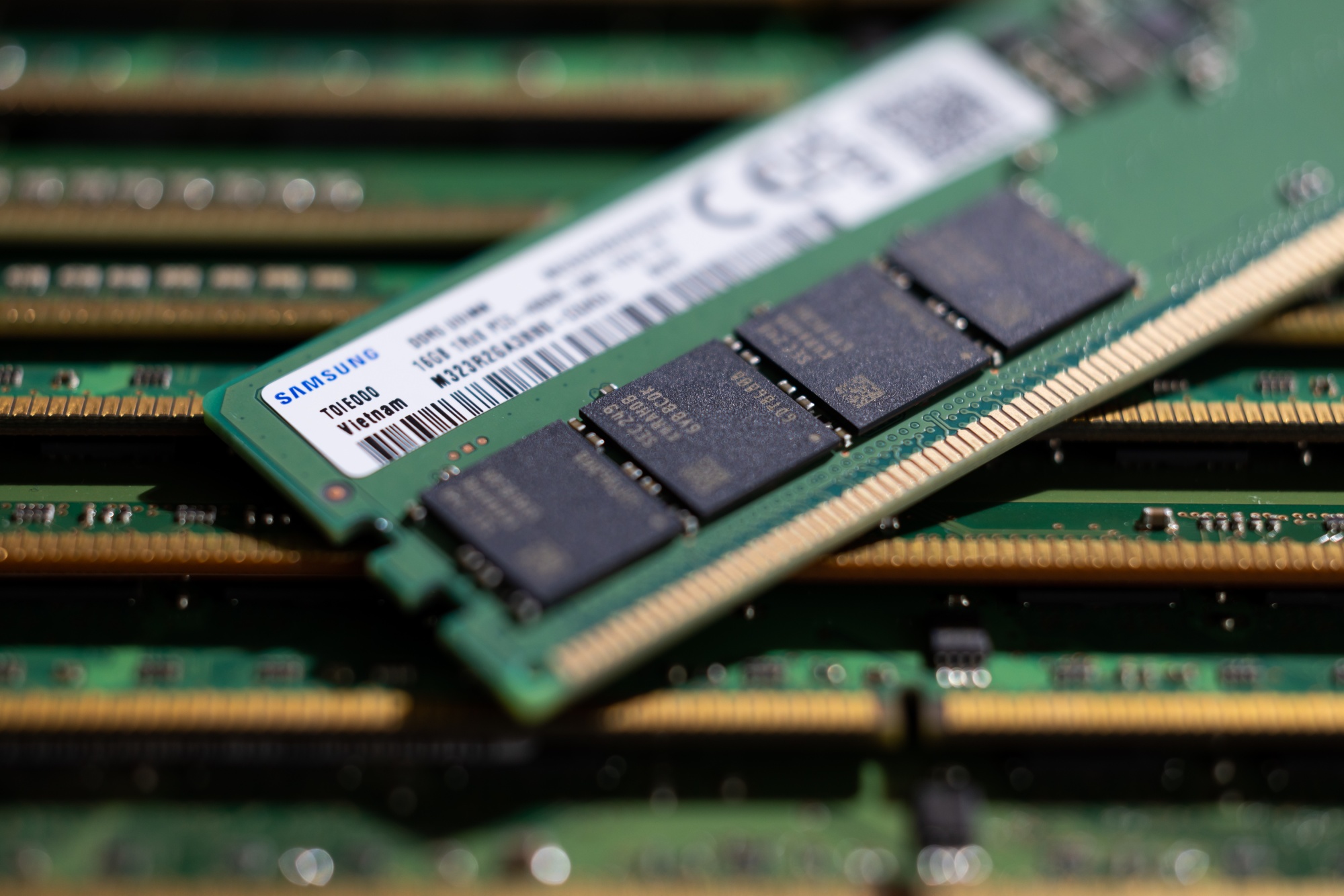

Samsung's Memory Chips Power 930% Profit Surge in Q1 2024

South Korean electronics giant Samsung Electronics has long been a leading player in the industry, particularly in the memory chip market. However, over the past year the company grappled with macroeconomic headwinds and weak demand for its products. In the first quarter of 2024, Samsung returned to the headlines for its financial performance: operating profit surged about 930% year-on-year to KRW 6.61 trillion ($4.77 billion).

Power of Many-Shot In-Context Learning in LLMs

As large language models (LLMs) continue to advance, their expanded input capacities, or context windows, have ushered in a new era for natural language processing applications. In this article, we explore the significance and potential of many-shot in-context learning (ICL) in enhancing the performance of LLMs on various downstream tasks.
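In-context learning means the model picks up a task from worked examples placed in the prompt, with no weight updates. "Many-shot" simply means using hundreds or thousands of such examples instead of a handful, which long context windows make possible. The helper below builds such a prompt from input-output pairs. The `Input:`/`Output:` format and the sentiment examples are hypothetical.

```python
def build_icl_prompt(examples: list[tuple[str, str]], query: str, shots: int) -> str:
    # Use the first `shots` demonstrations, then leave the final output blank for the model.
    blocks = [f"Input: {x}\nOutput: {y}" for x, y in examples[:shots]]
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)

# Hypothetical labelled pool; a many-shot setup might draw hundreds of these.
pool = [("great film", "positive"), ("dull plot", "negative"), ("loved it", "positive")] * 200
few = build_icl_prompt(pool, "not worth it", shots=4)
many = build_icl_prompt(pool, "not worth it", shots=500)
print(few)
print(f"many-shot prompt: {len(many)} characters")
```

Only `shots` changes between the few-shot and many-shot prompts here. In practice, the limit is the model’s context window, which is measured in tokens rather than the characters printed above.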

Why Startup Founders Should Demonstrate Outsized Potential

For a founder seeking venture capital funding, navigating the complexities of the investment landscape can be a daunting task. At the heart of the venture capital model lies the power law of returns, a principle that every founder must grasp to increase their chances of securing investment. According to this rule, a small number of highly successful investments generate the majority of a VC firm’s returns, offsetting the losses from failures.
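A small hypothetical portfolio shows the arithmetic of the power law. If most investments return little or nothing and one returns a large multiple, that single outcome can exceed the rest of the portfolio combined. The check sizes and multiples below are invented for illustration and are not data from any fund.

```python
# Hypothetical fund: ten equal $1M checks and their eventual return multiples.
multiples = [0, 0, 0, 0, 0.5, 1, 1, 2, 3, 50]
check = 1.0  # USD millions per investment

proceeds = sorted((m * check for m in multiples), reverse=True)
total = sum(proceeds)
top_share = proceeds[0] / total
print(f"Total returned: ${total:.1f}M on ${check * len(multiples):.1f}M invested")
print(f"Share from the single best investment: {top_share:.0%}")
```

Without the single 50x outcome, this portfolio returns $7.5M on $10M invested, a loss. That asymmetry is why investors look for companies that could plausibly become the outlier.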