Daily Stream

Perplexity's Generative AI Search Engine

The search engine market is witnessing an intriguing evolution with players like Perplexity, ChatGPT, and Microsoft’s Bing harnessing generative AI to deliver more precise and contextually relevant search results. But what sets Perplexity apart from its competitors, and why is it receiving considerable investor interest?

Your Paper Is Probably Peer Reviewed by a Bot

The use of artificial intelligence (AI) tools in peer review has emerged as a controversial topic. With the rise of platforms like ChatGPT, researchers have increasingly turned to AI to evaluate others’ work and modify peer-review reports. In this article, I will provide an analysis of the extent and significance of AI-generated peer-review reports and their implications for scientific publishing, based on an article published in Nature.

AWS Bedrock's Customization Options for Generative AI

In today’s rapidly advancing AI marketplace, customization is crucial. What unique customization options does AWS Bedrock offer that differentiate it from competitors? Generative AI models can create tailor-made content suited to specific applications, but organizations may possess unique requirements or proprietary models. This need for control and flexibility demands advanced model implementation and fine-tuning capabilities from cloud providers.

Robotaxis and the Twists in Elon Musk's Vision

Elon Musk has long been synonymous with ambitious plans and bold ideas. However, over the last year, Musk’s leadership at Tesla has taken an unsteady turn. With his recent announcement of Tesla’s focus on robotaxis, the question arises: what does this strategic pivot mean for Tesla’s long-term vision, and what implications will it have for the company’s product development and employee morale?

A New Legal Framework for AI-Generated Creative Works

As artificial intelligence (AI) continues to disrupt various industries, the use of copyrighted material to fuel its creative capabilities has sparked a contentious debate. On the one hand, creators and rights-holders argue that unauthorized use of their works constitutes a violation of their intellectual property rights. Conversely, AI companies maintain that these creations represent a groundbreaking example of human-machine collaboration and innovative expression. In this article, we’ll explore the complexities of this issue and propose a workable legal framework to reconcile these compe...

The EU's Crackdown on TikTok

The European Union (EU) has taken a significant step towards regulating social media’s impact on users’ mental health by launching a second investigation into TikTok. Following an ongoing investigation into various aspects of the video-sharing platform’s compliance with the Digital Services Act (DSA), the EU is now zeroing in on TikTok’s “Task and Reward Lite” feature.

What Are Ethical Foundations for Human-Like AI?

As the world of artificial intelligence (AI) continues to evolve, the push to design pseudoanthropomorphic systems, that is, AI that mimics human traits, is gaining traction. With potential benefits ranging from more engaging and emotionally responsive interactions to increased efficiency and personalization, these developments present an enticing opportunity for the tech industry. However, as we delve deeper into this realm, pressing ethical concerns emerge, compelling us to reconsider the role of trust, mental health, and tech leaders in shaping the future of human-like AI.

Who Owns the IPR in AI-Generated Content?

As AI technologies generate increasingly human-like content, the legal and ethical implications surrounding intellectual property rights have become a topic of intense debate. This essay explores the complex issue of who owns the rights to AI-generated content, especially when it comes to the use of copyrighted material.

We Must Revamp Our Approach to Evaluating AI Systems

As we continue to push the boundaries of artificial intelligence (AI) technology, it has become increasingly clear that our traditional evaluation methods are no longer fit for purpose. The limitations of these methods are being exposed as more sophisticated AI models come to market, and the consequences of using untrustworthy or ineffective technology in businesses and public bodies could be significant.

Global Investment in AI Is Declining, Research Shows

Recent data points to a shift in the AI investment landscape, with global investment in AI declining for the second consecutive year, according to Stanford’s Institute for Human-Centered Artificial Intelligence (HAI). So, what’s driving this trend, and what does it mean for the future of AI investments? I believe I have some insights to share.

Generative AI and the Future of Democratic Processes

As artificial intelligence (AI) continues to permeate our society, the potential implications for democratic processes have become a subject of intense debate. Nick Clegg, Meta’s global affairs chief, contends that generative AI poses little threat to democracy, but a closer examination of the evidence is required to separate reality from speculation.

Tech Companies' Desperate Pursuit of Data

As the global race to lead in advanced artificial intelligence (AI) technology intensifies, companies such as OpenAI, Google, and Meta are grappling with a significant challenge: acquiring sufficient data to fuel their AI systems’ growth while remaining ethical and compliant with copyright laws and company policies. To stay competitive and overcome data scarcity issues, these giants have reportedly cut corners, ignored corporate guidelines, and even debated testing the legal waters.

Generative AI Is Not As Open As It May Seem

The debate between open and proprietary systems continues to garner significant attention. The crux of the matter: why is there a renewed emphasis on open versus proprietary AI models? From my vantage point, this discourse holds immense importance for shaping the future of AI research and development.

AI and Human Expertise in Materials Science

As a researcher, I have long been captivated by the potential of artificial intelligence (AI) to accelerate our understanding of new materials and their properties. AI promises an alternative to the traditional trial-and-error methods and costly experimental procedures that have long characterized materials science. But the crucial question remains: how does AI’s capability in discovering new materials compare to the expertise of human materials scientists?

A Dive into AI Strategies for the US, UK, and Europe

As the competition to develop and train the most advanced artificial intelligence (AI) models intensifies, the geopolitical implications become increasingly apparent. With China emerging as a significant player in this domain, it is essential for the US, UK, and Europe to retain control over their data, talent, and AI model training. In this essay, I will explore two potential strategies and the challenges associated with each approach.

Meeting the Electricity Demands of the AI Industry

According to industry leaders, electricity supply is the latest chokepoint for AI, with major tech companies investing billions of dollars in computing infrastructure. Building data centres, which house the physical components underpinning computer systems, requires careful planning and can take several years to complete.

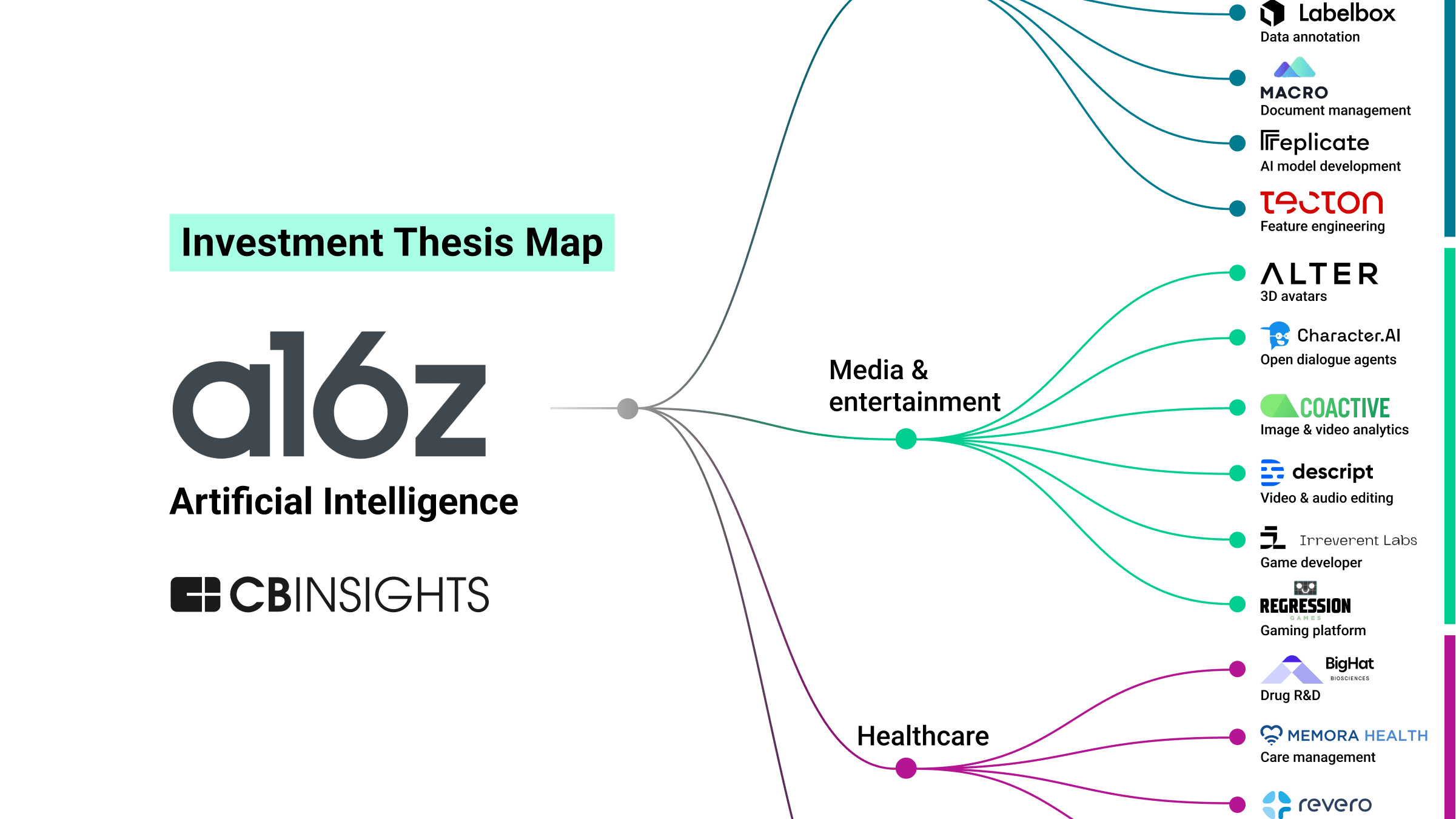

How Will VCs Refine Their AI Investment Strategy?

The emergence of generative artificial intelligence (AI) has sent ripples through the venture capital (VC) industry. Foundation models, such as those developed by OpenAI and Anthropic, have captured the public’s imagination with their human-like language understanding and impressive capabilities. Consequently, these models have attracted significant investments and high valuations.

How Cherub Connects Investors and Entrepreneurs

As the co-founder of Pathfinder Ventures, I have long pondered the best ways to efficiently discover the right startups that align with my investment focus. Enter Cherub, a new platform that appears to redefine how investors and entrepreneurs connect, and I was eager to uncover the specifics behind its innovative matching process.

Resurrecting Venture Capital's Sluggish Fundraising

The venture capital industry now faces a sustained fundraising slump. With global venture firms securing just $30.4bn in the first quarter of 2023, it’s clear that a significant challenge lies ahead. While multiple factors have contributed to this downturn, I argue that the exit market stands out as the most pressing one.

Artificial Intelligence in Ukraine's Military Strategy

As the conflict between Ukraine and Russia continues, the significance of technological innovation in shaping the outcome of this protracted war cannot be overstated. One technology that has emerged as a game-changer in the fight against Russia’s military superiority is artificial intelligence (AI). In particular, Ukraine’s military intelligence has been leveraging AI to identify valuable targets and detect enemy movements, giving it a much-needed strategic edge.