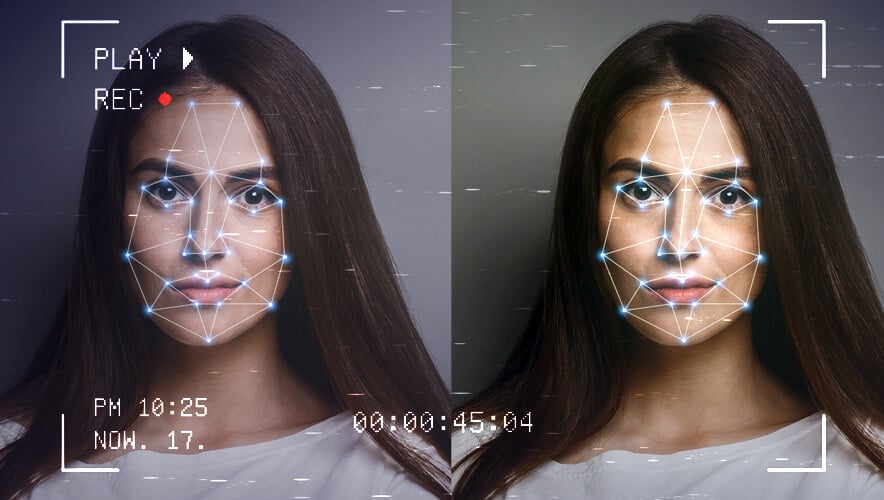

The proliferation of deepfake technology has become a major concern in our digital age. Deepfakes, which refer to manipulated media that use artificial intelligence to create hyper-realistic videos or images of individuals saying or doing things they never did, pose a significant threat to information integrity and personal privacy. As an expert in the field, I believe that the question of how easily deepfakes can be created and distributed, and what measures can be taken to prevent this, is of paramount importance.

The ease with which deepfakes can be generated is a cause for concern. Advanced deepfake technologies are readily accessible, and their use is not limited to tech-savvy individuals or organizations. In fact, deepfakes can be generated using simple software and tools, such as those offered by HeyGen or Synthesia, at relatively low cost. As a result, the market for deepfake videos and images is rapidly growing, and their potential uses range from trivial to dangerous.

Moreover, the anonymity of deepfake creators and the rapid spread of their content through social media platforms such as YouTube, TikTok, and Instagram make it challenging to trace a deepfake's origin and hold its creator accountable. Deepfakes can spread virally, reaching millions of people in a matter of hours. The stakes are high, as deepfakes can be used to manipulate public opinion, sow discord, and even threaten national security.

To counter the proliferation of deepfakes, robust measures are necessary. First, social media platforms and technology companies must invest in advanced detection and removal systems to identify and flag deepfakes. This requires a combination of human and artificial intelligence efforts, as well as collaboration between experts in computer science, psychology, and law enforcement.
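One way to picture the combination of automated and human effort is a simple triage rule: an automated detector scores each upload, clear cases are flagged automatically, and uncertain ones go to a human reviewer. The sketch below is only an illustration under stated assumptions. The `Upload` type, the `detector_score` field, the `triage` function, and both thresholds are hypothetical and do not describe any particular platform's system.

```python
from dataclasses import dataclass

# Hypothetical thresholds; in practice these would be tuned on labelled data.
FLAG_THRESHOLD = 0.9
REVIEW_THRESHOLD = 0.5


@dataclass
class Upload:
    media_id: str
    # Assumed output of some detector: probability in [0, 1] that the media is synthetic.
    detector_score: float


def triage(upload: Upload) -> str:
    """Route an upload to 'flag', 'human_review', or 'allow' based on its score."""
    if not 0.0 <= upload.detector_score <= 1.0:
        raise ValueError("detector_score must be in [0, 1]")
    if upload.detector_score >= FLAG_THRESHOLD:
        return "flag"          # high confidence: flag automatically
    if upload.detector_score >= REVIEW_THRESHOLD:
        return "human_review"  # uncertain: send to a human moderator
    return "allow"             # low score: no action
```

The middle band is the point of the design: it keeps human judgment in the loop for the cases where an automated score alone is least reliable.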

Second, legal frameworks are essential to provide clear guidelines on the creation, distribution, and use of deepfakes. This includes criminalizing the dissemination of deepfakes that cause harm or misrepresent individuals without their consent. Such measures will serve not only to deter deepfake creators but also to provide a sense of security and trust for online users.

Third, education and awareness campaigns are crucial. Most people are unaware of the risks deepfakes pose or how to identify them. Public education initiatives, such as public service announcements (PSAs), school curricula, and media campaigns, are needed to help people understand the dangers of deepfakes and learn how to spot them.

Fourth, technology companies and content creators must adopt stricter guidelines and verification processes to prevent the misuse of their platforms and tools for creating deepfakes. This requires a delicate balance between user privacy and preventing harm. Companies can take steps such as implementing strict user verification processes, limiting access to deepfake creation tools, and utilizing advanced algorithms to detect potential deepfakes.

Lastly, it is vital to remember that deepfakes are not solely a technological issue but a societal one. Addressing deepfakes effectively necessitates a collective effort from all stakeholders, including individuals, media organizations, policymakers, and technology companies. The potential consequences of inaction are too severe.

In conclusion, deepfakes pose a significant threat to the trustworthiness and integrity of information and communication in the digital age. To mitigate their impact, it is crucial to invest in advanced detection and removal systems, implement legal frameworks, educate the public, and adopt stricter verification processes. By working together, we can limit the use of deepfakes for malicious purposes and promote a more secure and trustworthy online environment.