Neuromorphic computing stands at the intersection of technology and biology, drawing inspiration from the most complex system known to us: the human brain. By emulating the structure and function of neurons and synapses, this emerging field promises to reshape artificial intelligence (AI), deep learning, and autonomous systems. Its potential to move past the limits of traditional computing, such as the slowdown of Moore’s Law scaling, and to edge closer to the holy grail of artificial general intelligence (AGI) is both exciting and profound. Despite this promise, neuromorphic computing is still in its infancy, grappling with challenges that range from accuracy and software limitations to accessibility and the establishment of benchmarks.

Challenges in Neuromorphic Computing

Algorithmic Development and Application

While neuromorphic computing shows promise, its evolution is significantly hampered by a focus on hardware development at the expense of algorithms and applications. Unlike deep neural networks (DNNs), which have matured through extensive research on both the hardware and software fronts, neuromorphic computing is still exploring effective algorithmic strategies that leverage its unique capabilities. This discrepancy underscores a critical challenge: developing neuromorphic-specific algorithms that can fully exploit the architecture’s potential for parallel processing, low power consumption, and inherent adaptability.

Accessibility and Usability

Neuromorphic systems are currently not as accessible to non-specialists as DNNs, largely due to the complexity of their design and the lack of user-friendly programming interfaces. DNN technologies have been integrated into widely accessible libraries and frameworks, making them more approachable for a broader audience. In contrast, neuromorphic computing requires a paradigm shift in programming approaches, making it less accessible and slowing its adoption and innovation rate.

Performance Metrics and Benchmarks

Another challenge lies in the lack of established benchmarks and performance metrics for neuromorphic computing, making it difficult to measure progress and compare with DNNs directly. DNNs have benefited from standardized datasets and benchmarks that have driven competition and innovation in the field. Neuromorphic computing needs similar benchmarks that not only showcase its strengths but also align with real-world applications to demonstrate its practical advantages over DNNs.

Software-Hardware Co-Design

The development of neuromorphic computing has highlighted the importance of software-hardware co-design, an area where DNN development has already made significant strides. Effective neuromorphic computing requires algorithms that match its hardware’s specific features, such as spiking behavior and temporal dynamics, which is a departure from the more uniform computational models used in DNNs. This co-design approach is crucial for unlocking neuromorphic computing’s potential but remains a significant challenge due to the nascent state of both neuromorphic hardware and software ecosystems.
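To make "spiking behavior and temporal dynamics" concrete, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a common simplified spiking model. It is an illustration only, not the model of any particular neuromorphic chip; the function name `simulate_lif` and all parameter values (`tau`, `v_thresh`, and so on) are assumptions chosen for readability.

```python
def simulate_lif(input_current, dt=1.0, tau=20.0,
                 v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron with forward Euler steps.

    input_current: sequence of input values, one per time step.
    Returns the list of time-step indices at which the neuron spiked.
    All parameter values are illustrative assumptions.
    """
    v = v_rest
    spike_times = []
    for step, current in enumerate(input_current):
        # The membrane potential leaks toward rest while integrating input.
        v += (dt / tau) * (-(v - v_rest) + current)
        if v >= v_thresh:
            # Crossing the threshold emits a spike and resets the potential.
            spike_times.append(step)
            v = v_reset
    return spike_times


weak = simulate_lif([0.5] * 100)    # input settles below threshold
strong = simulate_lif([2.0] * 100)  # input drives repeated threshold crossings
print(len(weak), len(strong))
```

The output of the neuron is a set of spike times rather than a single activation value, and its state depends on the history of its input. An algorithm written for this kind of hardware has to encode information in that timing, which is why it cannot simply reuse the layer-by-layer formulation common in DNNs.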

To bridge these gaps, the neuromorphic computing field must focus on developing a robust ecosystem around neuromorphic algorithms and applications. This involves creating accessible programming models and languages tailored to neuromorphic hardware, establishing clear benchmarks and performance metrics, and fostering a community that encourages open collaboration and sharing of resources and knowledge.

Neuromorphic Computing vs. Deep Neural Networks

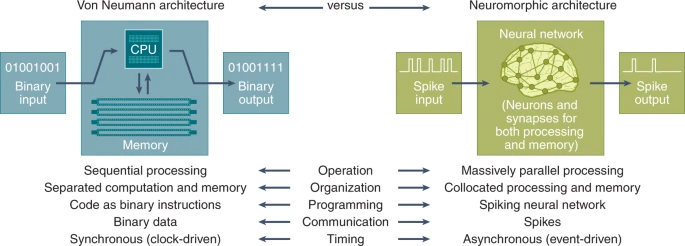

Neuromorphic computing and Deep Neural Networks embody two distinct architectures tailored to their specific computational goals and philosophies. Neuromorphic computing architecture is inspired by the biological brain, emphasizing parallel processing, event-driven computation, and collocated memory and processing akin to neurons and synapses. This design enables neuromorphic systems to efficiently handle tasks involving pattern recognition, sensory data processing, and learning from temporal dynamics, all while maintaining low power consumption. In contrast, DNN architectures leverage layered networks of artificial neurons where data and computations are processed in a highly structured, albeit less temporally dynamic, manner. DNNs typically run on conventional computing hardware, such as GPUs, which are optimized for the high-speed matrix operations central to deep learning tasks.
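The contrast between event-driven computation and dense matrix operations can be sketched in a few lines. The example below is a toy model under stated assumptions: the function names are hypothetical, inputs are binary spikes, and the operation count is only a rough proxy for work done, not a measurement of energy on real hardware.

```python
def dense_layer(weights, activations):
    """Dense pass: every weight is touched, even when its input is zero."""
    outputs = [0.0] * len(weights)
    ops = 0
    for i, row in enumerate(weights):
        for j, w in enumerate(row):
            outputs[i] += w * activations[j]
            ops += 1
    return outputs, ops


def event_driven_layer(weights, spike_indices):
    """Event-driven pass: only inputs that spiked trigger any accumulation."""
    outputs = [0.0] * len(weights)
    ops = 0
    for j in spike_indices:
        for i in range(len(weights)):
            outputs[i] += weights[i][j]
            ops += 1
    return outputs, ops


weights = [[0.5, 1.0, 0.25, 2.0],
           [1.5, 0.5, 0.75, 1.0]]
dense_out, dense_ops = dense_layer(weights, [0.0, 1.0, 0.0, 1.0])
event_out, event_ops = event_driven_layer(weights, [1, 3])
print(dense_out == event_out, dense_ops, event_ops)
```

Both functions produce the same outputs, but the event-driven version does work only in proportion to the number of spikes. When activity is sparse, as it often is with sensory data, this is the source of the efficiency argument made for neuromorphic designs.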

This difference in architecture underpins the neuromorphic approach’s potential for energy-efficient, adaptive computation, while DNNs excel in tasks requiring substantial computational power and large datasets, benefiting from the existing, mature ecosystem of software tools and hardware accelerators. Thus, the architectural distinctions between neuromorphic computing and DNNs highlight a trade-off between mimicking biological efficiency and adaptability versus harnessing brute computational strength and established development frameworks.

When comparing neuromorphic computing to DNNs, several key differences emerge:

- Energy Efficiency and Processing Speed: Neuromorphic chips are designed to mimic the brain’s energy efficiency and parallel processing capabilities closely. They hold the potential to perform computations faster and with significantly less energy than traditional DNNs, particularly for tasks like pattern recognition and sensory data processing.

- Adaptability and Learning: Neuromorphic systems aim to replicate the brain’s ability to learn from unstructured data in real-time, offering a level of adaptability and online learning that DNNs struggle to match. DNNs, while powerful, require extensive training datasets and often lack the ability to adapt to new information without retraining.

- Hardware Utilization: DNNs typically run on conventional hardware, including GPUs and CPUs, which can lead to inefficiencies such as the von Neumann bottleneck. In contrast, neuromorphic computing integrates memory and processing in a way that mimics biological neurons, potentially overcoming these limitations.

- Scalability and Fault Tolerance: Neuromorphic systems are inherently scalable and exhibit a high degree of fault tolerance, similar to the human brain. DNNs, on the other hand, can become increasingly complex and resource-intensive as they scale, and they are less tolerant of errors and hardware failures.
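The online learning described under Adaptability and Learning is often illustrated with spike-timing-dependent plasticity (STDP), a local rule in which a synapse strengthens when the input neuron fires shortly before the output neuron and weakens in the opposite order. The article does not name a specific learning rule, so the pair-based STDP sketch below is an assumption chosen for illustration; the function names and constants (`a_plus`, `a_minus`, `tau_plus`, `tau_minus`) are hypothetical.

```python
import math


def stdp_delta(dt_spike, a_plus=0.01, a_minus=0.012,
               tau_plus=20.0, tau_minus=20.0):
    """Weight change for one spike pair, with dt_spike = t_post - t_pre (ms).

    Constants are illustrative assumptions, not values from any chip.
    """
    if dt_spike > 0:
        # Pre fired before post: potentiate, more strongly for closer spikes.
        return a_plus * math.exp(-dt_spike / tau_plus)
    if dt_spike < 0:
        # Post fired before pre: depress, more strongly for closer spikes.
        return -a_minus * math.exp(dt_spike / tau_minus)
    return 0.0


def update_weight(weight, t_pre, t_post, w_min=0.0, w_max=1.0):
    """Apply one STDP update and keep the weight inside [w_min, w_max]."""
    new_weight = weight + stdp_delta(t_post - t_pre)
    return min(w_max, max(w_min, new_weight))


w = 0.5
w = update_weight(w, t_pre=10.0, t_post=15.0)  # causal pair: weight grows
w = update_weight(w, t_pre=40.0, t_post=32.0)  # anti-causal pair: weight shrinks
print(w)
```

Each update uses only the timing of two spikes at one synapse, so it can be applied as data arrives, without a separate training phase over a stored dataset. That locality is what distinguishes this style of learning from the batch retraining typical of DNNs.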

Despite these distinctions, both neuromorphic computing and DNNs offer valuable pathways toward advancing AI and understanding human cognition. The challenges facing neuromorphic computing are substantial but not insurmountable. With continued research and development, neuromorphic systems may well achieve their promise of providing more efficient, adaptable, and intelligent computing solutions, complementing and, in some cases, surpassing the capabilities of deep neural networks.

From Theory to Practice

As we peer into the future of neuromorphic computing, a critical question emerges: What practical steps are necessary to move this technology from theoretical models and small-scale experiments to real-world applications that can fully leverage its groundbreaking potential?

The path to real-world application is multifaceted. Overcoming technical challenges, such as enhancing accuracy and developing neuromorphic-specific software and algorithms, is an immediate priority. This technical evolution must be complemented by the creation of new programming languages and computational environments designed specifically for neuromorphic architectures. Moreover, fostering interdisciplinary collaboration is vital. Teams combining expertise in computer science, neuroscience, and engineering are essential to translate the complex functionalities of the human brain into tangible computing solutions.

Establishing clear benchmarks and performance standards will also be crucial in guiding the technology’s development and ensuring its adaptability and efficacy in solving real-world problems. Such standards will not only facilitate the assessment of neuromorphic systems but also drive innovation by setting clear goals for researchers and developers.

The commitment of major tech companies, research institutions, and government entities to neuromorphic computing is a testament to its potential. By addressing these challenges head-on, we can accelerate the transition of neuromorphic computing from an exciting theoretical concept to a transformative technology with widespread applications in AI, autonomous vehicles, the Internet of Things (IoT), and beyond.