A recently proposed approach known as Direct Preference Optimization (DPO) offers a simpler way to train large language models (LLMs) to match human preferences. It targets the fine-tuning stage, where a pretrained model is aligned with human expectations, and aims to make that stage cheaper and easier to run.

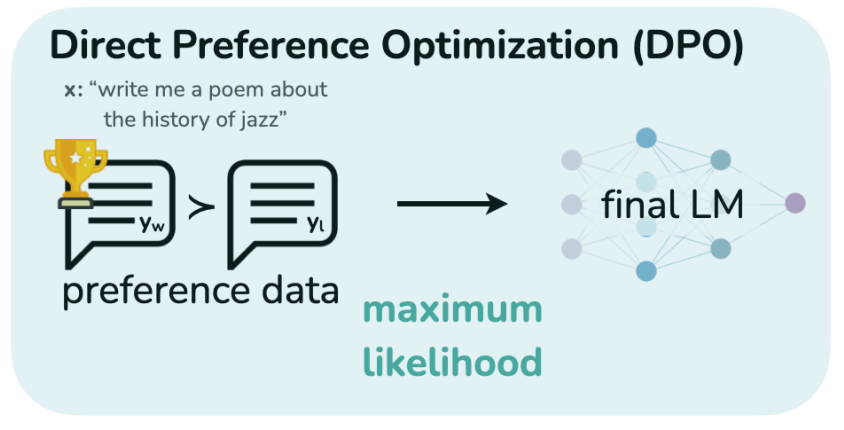

DPO simplifies the traditionally complex and resource-intensive process of fine-tuning LLMs. The established method, reinforcement learning from human feedback (RLHF), requires the laborious step of training a separate reward model and then optimizing the LLM against it with reinforcement learning. DPO skips both steps and trains the model directly on human preference data.

The innovation of DPO lies in its elimination of the separate reward model. Instead, DPO trains the LLM on pairs of responses to the same prompt, where a human has marked one response as preferred and the other as rejected. A simple classification-style loss raises the likelihood of the preferred response relative to the rejected one, measured against a frozen reference copy of the model so that the fine-tuned model does not drift too far from its starting point. Because human feedback no longer has to be interpreted through an additional learned model, and no reinforcement learning loop is needed, training takes fewer computational resources and leaves less room for a reward model to misrepresent human intentions.
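To make the idea concrete, here is a minimal sketch of the DPO loss for a single preference pair, written in plain Python. It assumes you already have the summed log-probabilities of the preferred ("chosen") and rejected responses under both the model being trained and the frozen reference model. The function names, the example numbers, and the `beta` value of 0.1 are illustrative assumptions, not taken from any particular library.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # assumed value; controls how strongly the policy may deviate
) -> float:
    """DPO loss for one (chosen, rejected) pair of responses."""
    # How much more (or less) likely each response is under the policy
    # than under the frozen reference model, in log space.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    # The loss shrinks as the policy favors the chosen response more
    # strongly than the reference model does.
    margin = beta * (chosen_logratio - rejected_logratio)
    return -log_sigmoid(margin)


# Hypothetical log-probabilities, for illustration only.
# Policy identical to the reference: the margin is zero.
loss_at_start = dpo_loss(-12.0, -15.0, -12.0, -15.0)
# Policy has shifted probability toward the chosen response.
loss_improved = dpo_loss(-10.0, -18.0, -12.0, -15.0)
print(loss_at_start, loss_improved)
```

When the policy and the reference agree, the loss equals log 2, the value for a coin-flip classifier. It falls as the policy learns to prefer the chosen response. In practice this quantity would be averaged over a batch and minimized with gradient descent in a deep learning framework.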

This direct approach not only simplifies the fine-tuning of LLMs but can also improve how closely they follow human preferences. A prominent example is Mixtral 8x7B, a Sparse Mixture of Experts model developed by Mistral AI. According to Mistral, the base model matches or outperforms Llama 2 70B on most benchmarks while using far fewer active parameters per token, and its instruction-following variant, Mixtral 8x7B Instruct, was tuned with supervised fine-tuning followed by DPO.

The implications of DPO are significant. Its efficiency, put at a three- to six-fold improvement over RLHF, opens the door for smaller companies to enter the competitive arena of AI development. Previously, the domain was dominated by giants with the resources to manage the cumbersome RLHF process. With DPO, the playing field is becoming more level, widening access to high-quality AI development.

Yet an intriguing question emerges from this innovation: how does DPO incorporate human feedback effectively without also baking human biases into the LLM? This concern is not trivial. DPO learns whatever its preference data expresses, so much of its success depends on drawing from a diverse and comprehensive pool of human feedback. That diversity brings in a broad spectrum of human perspectives, mitigating the risk of reinforcing narrow, biased views. Furthermore, because every training example pairs a preferred response with a rejected one, the model sees both positive and negative feedback, which allows for a more nuanced refinement of the AI’s outputs and may reduce the imprint of individual biases.

However, the path is not without its challenges. Ensuring the feedback pool remains diverse and representative requires constant vigilance. Moreover, the dynamic nature of human preferences means that what is considered unbiased today may not hold tomorrow. Continuous monitoring, updating of feedback mechanisms, and ethical considerations are paramount to sustainably integrating DPO into AI development.
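One simple form that such monitoring could take is a periodic check of how the preference pairs are distributed across annotator groups. The sketch below is only an illustration: the record layout, the `annotator_group` field, the group labels, and the 20% threshold are all hypothetical, and a real audit would need far richer criteria than a head count.

```python
from collections import Counter


def group_shares(pairs, key="annotator_group"):
    """Return the fraction of preference pairs contributed by each group."""
    counts = Counter(pair[key] for pair in pairs)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {group: count / total for group, count in counts.items()}


def underrepresented(pairs, min_share=0.2, key="annotator_group"):
    """List groups whose share of the feedback pool is below min_share."""
    shares = group_shares(pairs, key)
    return sorted(group for group, share in shares.items() if share < min_share)


# Hypothetical feedback pool: 6 pairs from group A, 3 from B, 1 from C.
pairs = [
    {"prompt": "p", "chosen": "x", "rejected": "y", "annotator_group": group}
    for group in ["A"] * 6 + ["B"] * 3 + ["C"]
]

print(group_shares(pairs))
print(underrepresented(pairs))
```

Here group C supplies only one of ten pairs, so it falls below the assumed threshold and would be flagged for additional data collection.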